1. The EU AI Act Enters into Force on 1 August 2024 (Part 1)

(1) Introduction

As artificial intelligence (AI) rapidly advances and permeates every industry, the European Union (EU) has taken a significant step to address the challenges and opportunities presented by this transformative technology.1 The EU Artificial Intelligence Act (AI Act) creates the world's first comprehensive legal framework for AI, including generative AI. While generative AI presents many opportunities, the problems associated with it have led to a deeper awareness of the risks posed by this technology. These problems include infringement of intellectual property rights, fears that white-collar jobs will be lost, and AI misuse and misinformation.

The AI Act aims to promote the development and uptake of AI while ensuring that its use remains safe, transparent, and respectful of fundamental human rights.2 It is poised to have far-reaching implications for businesses operating within the EU. As the first law of its kind globally, it is also likely to affect businesses operating outside Europe. The United States, Canada, the United Kingdom, South Korea, India, Singapore, and other major AI powers are also beginning to consider which AI policies to adopt, and they are closely observing the AI Act's rollout in the EU. This newsletter provides an overview of the key aspects of the AI Act, focusing on the implications for businesses navigating this new regulatory landscape.

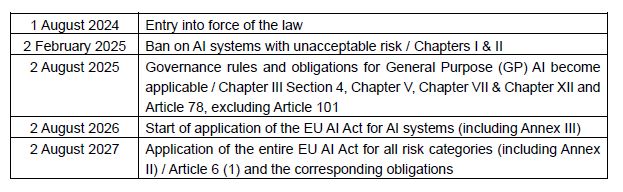

On 12 July 2024 the AI Act was published in the Official Journal of the EU. The text of the law is final and will enter into force on 1 August 2024. Its provisions will apply according to the staggered timetable below.

(2) A Risk-Based Approach

The AI Act defines an artificial intelligence system (AI system) as a machine-based system that is designed to operate with varying levels of autonomy, that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers from the input it receives how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.3 This broad definition is intended to capture forms of AI that do not yet exist. Building on this definition, the AI Act takes a risk-based approach. It categorizes AI systems according to the level of risk they pose to individuals and society and regulates each category differently. This approach is intended to allow for a more targeted and proportionate regulatory framework, ensuring that the most stringent requirements apply only to the highest-risk applications. Under the AI Act, individual AI systems are classified into one of four categories:

- Minimal risk: The majority of AI systems currently used by individuals are likely to fall into this category and will not be subject to specific regulation under the AI Act.

- Limited risk:4 AI systems with more limited risks, such as chatbots and deepfakes, will face transparency obligations to ensure that users are aware they are interacting with an AI system.

- High risk:5 AI systems identified as posing significant risks to health, safety, or fundamental rights will be subject to strict requirements before they can be placed on the market, and will remain highly regulated thereafter. An AI system is considered high-risk in either of two cases. The first is where it is used as a safety component of a product, or is itself a product, covered by the EU harmonisation legislation listed in the Act. The second is where it falls into one or more of the following eight categories:6

    - AI handling biometric information.

    - AI operating critical infrastructure (such as digital, water, gas, electric, or traffic management systems).

    - AI used in education (including student evaluation or monitoring of test-takers).

    - AI used in employment and job placement (including hiring decisions).

    - AI used in determining access to essential services (including healthcare, health insurance, and evaluations of creditworthiness).

    - AI used in law enforcement.

    - AI used in migration, border control, and asylum decisions.

    - AI used in the interpretation and application of the law.

- Unacceptable risk:7 AI systems that pose a clear threat to safety, livelihoods, and fundamental rights will be banned. This includes AI systems that exploit vulnerabilities, manipulate behaviour, or conduct social scoring. It also includes predictive policing based solely on profiling, and the untargeted scraping of facial images from the internet or CCTV footage to build facial recognition databases.

In addition, the Act regulates all general-purpose AI (GPAI) models.8 Those classified as GPAI models with systemic risk are subject to additional requirements. In the next edition of our EU Law newsletter, we will elaborate further on the obligations imposed by the EU AI Act.

Part 2 of this newsletter on the EU AI Act will be published in August 2024.

2. Introduction of Recent Publications

- 'Chambers Global Practice Guides' on Cartels 2024 - Law & Practice - July 2024 (Authors: Shigeyoshi Ezaki, Vassili Moussis, Takeshi Ishida, Yoshiharu Usuki)

- Panel Discussion: AI Guidelines for Business Part I: Commentary on AI Guidelines for Business - July 2024 (Author: Takashi Nakazaki)

- Legal Questions and Governance of Generative AI: Norms Shaping Tomorrow - July 2024 (Author: Takashi Nakazaki)

- Chambers Global Practice Guides - Venture Capital 2024 - May 2024 (Authors: Keita Tokura, Takahiro Suga, Ryoichi Kaneko, Shogo Tsunoda)

- Adoption of Corporate Sustainability Due Diligence Directive - May 2024 (Authors: Koichi Saito, Wataru Shimizu, Suguru Yokoi, Ryoichi Kaneko, Mai Kurano, Itaru Hasegawa)

- Competition Inspections in 25 Jurisdictions - Japan Chapter - March 2024 (Authors: Yusuke Nakano, Vassili Moussis, Takeshi Ishida)


Footnotes

2 For the full text of the Act, see Regulation (EU) 2024/1689.

3 Regulation (EU) 2024/1689 Article 3: Definitions.

4 Regulation (EU) 2024/1689 Article 50: Transparency Obligations for Providers and Deployers of Certain AI Systems.

5 Regulation (EU) 2024/1689 Article 6: Classification Rules for High-Risk AI Systems.

6 Regulation (EU) 2024/1689 Annex III: High-Risk AI Systems.

7 Regulation (EU) 2024/1689 Article 5: Prohibited AI Practices.

8 Regulation (EU) 2024/1689 Article 51: Classification of General-Purpose AI Models as General-Purpose AI Models with Systemic Risk.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.