The emergence of deepfake technology, particularly within the music industry, has sparked significant debate and concern. Deepfakes, which are synthetic media generated using AI to replicate and/or manipulate a known artist's likeness and/or voice, have the potential to revolutionise the music industry. While deepfakes have legitimate uses, they can also be created with malicious intent. This raises critical legal and ethical questions. In this blog, we explore the legal ramifications of deepfakes in the music industry, focusing on intellectual property rights and privacy concerns, and take a closer look at the regulatory framework to be provided under the AI Act.

Deepfakes and IP

At the heart of the legal challenges posed by deepfakes in the music industry are intellectual property (IP) rights. Musicians and vocal artists rely heavily on copyright to protect their works and identities, while producers also have every interest in safeguarding their exclusive rights. So, when AI-generated deepfakes replicate or manipulate an artist's voice or create new songs in their style, several IP issues could arise, including:

- Copyright Infringement: Deepfake technology can potentially infringe upon the copyright of original works. If an AI-generated song closely mimics an existing track, then it might be considered to be a derivative work, requiring prior approval from the original copyright holder.

- Moral Rights: Alongside economic rights, the Berne Convention also grants authors moral rights, which include the right to attribution and the right to object to derogatory treatment of their work. Deepfakes that distort an artist's voice or style in ways they find objectionable could violate these rights.

- Right of Publicity: Many jurisdictions recognise the right of publicity, which grants individuals control over the commercial use of their name, image, and likeness. Deepfake audio that replicates a musician's voice for commercial purposes without consent could infringe upon this right.

Privacy concerns

Privacy issues are another significant concern with deepfake technology in the music industry. The unauthorised use of a musician's voice or likeness can lead to several privacy violations:

- Consent: The creation and dissemination of deepfakes without an artist's consent could lead to significant privacy violations. Musicians might find their voices used in contexts they did not consent to, which could damage their reputation and career.

- Data Protection: The process of creating deepfakes inevitably involves the collection and processing of personal data. Failure to comply with legislation like the General Data Protection Regulation (GDPR) could lead to significant legal consequences.

The AI Act

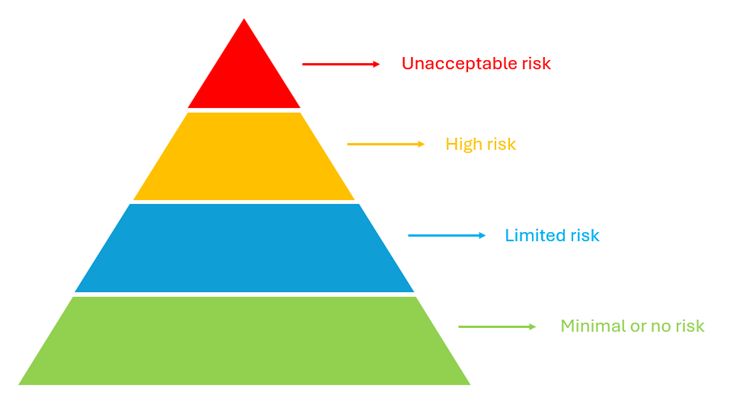

The long-awaited Regulation laying down harmonised rules on Artificial Intelligence (AI Act) was finally published on 12 July 2024, and will enter into force on the 20th day after publication, i.e. on 1 August 2024. However, it will only generally apply after a 24-month transitional period, i.e. from 2 August 2026, although certain rules will apply from an earlier date. The AI Act aims to establish a comprehensive regulatory framework for artificial intelligence, addressing both the opportunities and risks associated with AI. It classifies AI systems into different risk categories, ranging from minimal to unacceptable risk, with corresponding regulatory requirements.

- Limited risk: Deepfake technology is to be classified as limited risk; for now, at least, it is not included in the AI Act's list of high-risk AI systems. This means that deployers of AI systems that generate or manipulate image, audio or video content constituting a deepfake are bound by transparency obligations. More specifically, they will have to disclose that the content has been generated or manipulated using AI. However, the AI Act sets out that when the content forms part of an "evidently artistic, creative, satirical, fictional or analogous work or programme", the transparency obligations are limited to disclosure in a way that does not hamper the display or the enjoyment of the work. This limitation makes sense when deepfake technology is used legitimately, but raises the question of whether it could leave room for ill-intentioned actors to adhere to the transparency obligations in appearance only, in such a way that the disclosure goes unnoticed by viewers or listeners.

- High risk: The AI Act does, however, 'leave the door open' for deepfakes to be marked as high-risk technology. To be categorised as such, deepfakes would have to pose a threat to society or to a natural person's health, safety or fundamental rights and be used in certain areas set out by the AI Act. Entertainment, for example, is not one of those areas, thus generally excluding deepfakes in the music industry from the high-risk category. In that regard, it is useful to point out that the right to privacy and the right to the protection of intellectual property are considered fundamental rights under the EU Charter, regardless of the area in which an infringing product is used or an infringing party operates. Considering the harm that could be caused by deepfakes, one might wonder whether it would be more suitable to include deepfakes in the high-risk category under the AI Act, as this would subject creators and deployers of deepfake technology to more stringent requirements, including a third-party assessment before entering the market, robust risk management, strict transparency obligations and accountability measures.

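- Enforcement and Penalties: The AI Act provides for significant penalties for non-compliance, with fines of up to 35 million EUR or 7% of a company's global annual turnover for the most serious infringements. Breaches of the transparency obligations fall under a lower tier, capped at 15 million EUR or 3% of global annual turnover. This still provides a strong incentive for entities using deepfake technology to adhere to the transparency obligations.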
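
To make the penalty ceiling concrete, the short Python sketch below works out the upper limit of a fine for a given turnover. It assumes our reading of the AI Act's penalty provisions, namely that for undertakings the ceiling is whichever is higher of the fixed amount and the percentage of global annual turnover. The function name `max_fine_eur`, its parameters and the turnover figures are hypothetical and serve only as illustration; the actual fine in a given case is set by the competent authority and may be well below the ceiling.

```python
def max_fine_eur(global_turnover_eur: int,
                 fixed_cap_eur: int = 35_000_000,
                 turnover_percent: int = 7) -> int:
    """Return the upper limit of a fine in EUR (illustrative only).

    Assumption: the ceiling is the higher of the fixed amount and the
    given percentage of global annual turnover. The defaults are the
    35 million EUR / 7% figures mentioned in the text.
    """
    turnover_based_cap = global_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, turnover_based_cap)


# Hypothetical company with a turnover of 200 million EUR:
# 7% is 14 million EUR, so the fixed amount of 35 million EUR is the ceiling.
print(max_fine_eur(200_000_000))    # 35000000

# Hypothetical company with a turnover of 1 billion EUR:
# 7% is 70 million EUR, which exceeds the fixed amount.
print(max_fine_eur(1_000_000_000))  # 70000000
```

The same function can be called with other values for `fixed_cap_eur` and `turnover_percent` to model a different penalty tier.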

Conclusion

Deepfake technology presents both exciting opportunities and profound challenges for the music industry. From a legal perspective, the creation and use of deepfakes intersects with intellectual property rights, privacy concerns, and emerging regulatory frameworks, such as the AI Act. As this technology continues to evolve, it will be crucial for artists, industry stakeholders, and lawmakers to navigate these complexities to ensure that AI's benefits are realised while protecting the rights and interests of individuals and economic operators.

The AI Act represents a significant step forward in regulating AI technologies, including deepfakes. By fostering transparency and accountability, it aims at a more ethical and legally sound integration of AI in various industries. Concerning the illegitimate use of deepfakes in the music industry, however, it seems that its effect will be limited, as no sufficient punitive measures are available, and wronged parties are likely to have more success resorting to legal instruments in the IP and data protection domains.
