Ethical artificial intelligence frameworks are still emerging across both public and private sectors, making the task of building a responsible AI program particularly challenging. Organizations often struggle to define the right requirements and implement effective measures. So, where do you start if you want to integrate AI ethics into your operations?

In Part I of our AI ethics series, we highlighted the growing pressure on organizations to adopt comprehensive ethics frameworks and the impact of failing to do so. We emphasized the key motivators for businesses to proactively address potential risks before they become reality.

This article delves into what an AI ethics framework is and why it is vital for mitigating these risks and fostering responsible AI use. We review AI ethics best practices, explore common challenges and pitfalls, and draw insights from the experiences of leading industry players across various sectors. We also discuss key considerations to ensure an effective and actionable AI ethics framework, providing a solid foundation for your journey towards ethical AI implementation.

AI Ethics Framework: Outline

A comprehensive AI ethics framework offers practitioners a structured guide with established rules and practices, enabling the identification of control points, performance boundaries, responses to deviations, and acceptable risk levels. Such a framework ensures timely ethical decision-making by asking the right questions. Below, we detail the main functions, core components, and key controls necessary for a robust AI ethics framework.

Main Functions

The National Institute of Standards and Technology (NIST) outlines four core functions essential for all AI ethics frameworks. These functions provide the foundation for developing and implementing trustworthy AI:

- Map: Outlines the purpose, goals, and expectations.

- Measure: Evaluates performance against objectives and introduces controls.

- Manage: Leads to optimization and adaptability of processes when risks or unforeseen circumstances arise.

- Govern: Involves continuous monitoring through oversight mechanisms to ensure efficiency, effectiveness, and compliance with regulatory requirements.

Figure 1: NIST's Risk Management Framework (RMF) Core functions for AI

These functions work together continuously throughout the AI lifecycle, enabling effective conversations surrounding the development and implementation of AI technologies (see Figure 1).

Core Components

Beyond the main functions, an AI ethics framework must include certain core components to be successful. These components ensure the framework is comprehensive and adaptable:

- Objective: Define a clear objective to guide the AI system.

- Controls and Metrics: Implement controls and metrics to measure the objective, ensuring compliance and effectiveness.

- Approach: Establish an approach for how to achieve the objective.

- Target: Set targets to signify whether the objective has been achieved.

- Oversight: Ensure third-party oversight to maintain accountability and compliance.

- Regular Revision: Regularly revise and adapt the framework to address new challenges and risks.

Incorporating these components helps organizations build a resilient and effective AI ethics framework that aligns with their strategic goals and ethical standards.
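As a rough illustration, the six components above could be recorded as one structured entry per objective, so that each objective carries its own metric, target, reviewer, and revision date. The sketch below is a minimal Python example; the class name `EthicsObjective`, its fields, and the sample values are hypothetical and not drawn from NIST or any published framework.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class EthicsObjective:
    """One framework entry; all field names are illustrative assumptions."""
    objective: str          # Objective: what the AI system should uphold
    metric: str             # Controls and Metrics: how the objective is measured
    approach: str           # Approach: how the objective will be pursued
    target: float           # Target: threshold that signals success
    lower_is_better: bool   # Direction in which the metric improves
    overseer: str           # Oversight: independent reviewer
    next_review: date       # Regular Revision: when the entry is revisited

    def is_met(self, observed: float) -> bool:
        """Return True when the observed metric value satisfies the target."""
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target

    def review_due(self, today: date) -> bool:
        """Return True when the entry is due for its scheduled revision."""
        return today >= self.next_review


# Hypothetical example entry.
consent_objective = EthicsObjective(
    objective="Personal data is processed only with explicit consent",
    metric="share of records lacking a consent flag",
    approach="block ingestion of records without a consent flag",
    target=0.0,
    lower_is_better=True,
    overseer="external auditor",
    next_review=date(2025, 1, 1),
)
```

Keeping the target and its direction next to the metric means a single call such as `consent_objective.is_met(observed)` can answer whether the objective has been achieved.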

Key Controls

To ensure the effectiveness and trustworthiness of the AI system, it is important to consider specific key controls when building your AI ethics framework. These controls, integral to the "Measure" function, provide necessary oversight and protection:

- Attribution: Ability to accurately identify and credit the sources of information, data, or content generated to maintain the integrity of information.

- Security: Protecting AI systems against unauthorized access, manipulation, or exploitation to ensure privacy, integrity, and trustworthiness.

- Consent: Obtaining explicit permission before collecting, processing, or storing personal information to respect individual privacy.

- Legality: Ensuring AI systems comply with applicable laws, regulations, and ethical standards. Steps should be taken to mitigate legal risks associated with AI development, deployment, and usage.

- Equity: Fair treatment of all individuals, regardless of their background, characteristics, or circumstances, including equal representation, opportunity, and accessibility throughout the development and deployment of AI systems.

- Transparency: Providing clear and understandable information to users about how the AI system works, makes decisions, and handles data.

- Bias: Addressing biases, including group bias (favoring one group over another), individual bias (unique thoughts/tendencies), and statistical bias (inaccuracies in results when working with datasets/algorithms).

Integrating these key controls into the AI ethics framework can ensure that organizations build AI systems which operate as intended and maintain the highest ethical standards.

By understanding and implementing the main functions, core components, and key controls, organizations can create a comprehensive and cohesive AI ethics framework. This framework will safeguard long-term success, foster trust, and ensure the responsible use of AI technologies, ultimately benefiting both the organization and society at large.

Best Practices

Comparing current AI ethics frameworks across private industry, government, and academia reveals varied approaches yet common themes. Predominantly, frameworks emphasize fairness, privacy, governance, and transparency, although depth and structure vary significantly.

Across sectors today, three common categories of AI ethics frameworks exist:

- Brief Concepts with No Depth: These frameworks outline ethical principles but lack detailed guidelines or actionable steps.

- In-Depth Thoughts in Specific Areas: Some frameworks offer strong and thorough guidance in particular aspects of AI ethics, such as data privacy or algorithmic fairness.

- Solid Framework Foundations: These frameworks provide comprehensive and structured guidelines covering multiple aspects of AI ethics, including governance and transparency.

While these three categories are prevalent, the following outlines where organizations are particularly excelling in their AI ethics approaches.

Best Practice #1: Establish Comprehensive Measurement Standards

Microsoft's Comprehensive AI Ethics Framework

Microsoft's AI ethics framework, which builds on NIST's AI Risk Management Framework, stands out for its comprehensive and detailed approach. Its 27-page Responsible AI Standard provides a robust set of resources that detail:

- AI Application Requirements: Specific guidelines for the ethical use of AI applications.

- Risks to Model and Application Layers: Identification and management of potential risks throughout different stages of AI development.

- Systematic Measurement Guidelines and Metrics: Standards for assessing ethical considerations and ensuring they are met.

- Risk Management Approaches: Strategies to mitigate identified risks effectively.

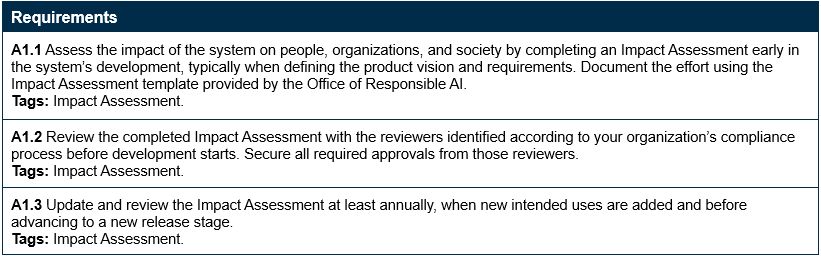

For example, Microsoft has created six goal types, each broken down into further subgoals, which outline the requirements needed to meet each subgoal (see Figure 2). This detailed approach ensures that ethical considerations are systematically measured and addressed throughout the AI lifecycle, promoting responsible innovation and ethical AI deployment.

Figure 2: Microsoft's Responsible AI Accountability Goal A1

The European Commission also excels in measurement by providing a comprehensive framework that addresses the structure, measurement, and governance of ethical AI. While many government entities outline aspirations for future AI ethical frameworks, the European Commission provides concrete guidance and systematic measurement, ensuring that ethical principles are integrated into AI development and deployment.

Best Practice #2: Implement Legal Mandates for Ethical AI

The European Commission's Approach

The European Commission successfully provides guidelines for ethical and robust AI by making legal obligations mandatory in the development, deployment, and use of AI. They define the foundations of trustworthy AI and principles that must be followed to be considered ethical, turn these principles into seven key requirements needed throughout the AI's lifecycle, and create an assessment to operationalize trustworthy AI. This legal framework ensures that ethical considerations are not just aspirational but legally binding, providing a robust foundation for trustworthy AI.

- Mandatory Legal Obligations: Ensuring AI ethics are integrated into laws.

- Foundations of Trustworthy AI: Defining core principles for ethical AI.

- Seven Key Requirements: Essential criteria for ethical AI throughout its lifecycle.

- Assessment Tools: Instruments to operationalize and ensure compliance with ethical standards.

Academic Contributions

Academic institutions are also contributing to legal mandates in AI ethics. Carnegie Mellon University's Responsible AI initiative at the Block Center translates research into policy and social impact, fostering educational and collaborative partnerships. These efforts help shape legal and regulatory standards, ensuring that AI technologies adhere to societal values and enhance human capabilities.

Carnegie Mellon University's Responsible AI Initiative

- Research Translation: Converting academic research into actionable policies.

- Educational Partnerships: Collaborating with various stakeholders to promote ethical AI.

- Policy Impact: Influencing legal and regulatory standards for AI ethics.

These efforts by both legal bodies and academic institutions ensure that AI technologies are developed and used responsibly, aligning with societal values and enhancing human capabilities.

Best Practice #3: Foster Effective Governance Structures

MIT's Pragmatic Approach

MIT excels in fostering a comprehensive and pragmatic approach to governance and regulatory standards in AI ethics. Their AI Policy Brief presents clear principles for ethical AI that prioritize security, privacy, and equitable benefits. MIT advocates for robust oversight mechanisms to ensure responsible AI deployment and emphasizes the need for extending existing legal frameworks to AI. This approach ensures that AI remains safe, fair, and aligned with democratic values.

Key Principles from MIT's AI Policy Brief

- Security: Ensuring AI systems are secure and resistant to misuse.

- Privacy: Protecting user data and respecting privacy rights.

- Equitable Benefits: Ensuring AI technologies benefit all segments of society fairly.

MIT underscores the importance of governance structures in maintaining accountability and compliance in AI systems. By integrating ethical principles within a structured but adaptable framework, MIT highlights the crucial role of governance in AI ethics. This approach ensures that AI technologies are effectively monitored and regulated, adapting to technological advancements while safeguarding ethical standards.

These efforts by MIT reinforce the necessity for a regulatory approach that evolves in tandem with technological progress, ensuring that AI development and deployment remain aligned with societal values and democratic principles.

Best Practice #4: Promote Human-Centric AI Development

Stanford University's Commitment

Stanford University's Human-Centered Artificial Intelligence (HAI) initiative stands out for its commitment to steering AI development to enhance human capabilities rather than replace them. Their focus on upholding integrity, balance, and interdisciplinary research ensures that AI technologies are developed with a keen sense of their societal impact. Key aspects of HAI's approach include:

- Integrity: Ensuring AI systems are developed and deployed ethically.

- Balance: Maintaining a balance between technological advancement and societal good.

- Interdisciplinary Research: Incorporating insights from various disciplines to address the multifaceted nature of AI ethics.

Key Principles of HAI

- Transparency: Advocating for openness in AI development processes.

- Accountability: Ensuring developers and users of AI systems are held responsible for their impacts.

- Diverse Perspectives: Incorporating viewpoints from various stakeholders to create inclusive AI systems.

By emphasizing human-centric development, Stanford's HAI promotes responsible AI that promises shared prosperity and adherence to civic values. This approach ensures that AI technologies are developed with the primary goal of benefiting humanity and upholding ethical standards.

Current State of AI Ethics Frameworks

Overall, most frameworks are still in a developmental phase, emphasizing general considerations over precise implementation guidelines. Common issues include:

- General Considerations: Many frameworks outline broad objectives but lack specific steps for implementation.

- Lack of Detailed Procedures: The absence of well-defined structures can make it challenging for organizations to interpret and apply the guidance provided.

- Inconsistencies: Frameworks vary significantly in depth and structure from one entity to another, making it difficult to combine them into a cohesive strategy.

By addressing these inconsistencies and integrating best practices from various entities, organizations can achieve robust and cohesive AI ethics strategies.

AI Ethical Frameworks: Common Pitfalls

Across all sectors, AI ethics frameworks consistently encounter common pitfalls, including missing fundamental components, incorrectly interpreting controls, or lacking sufficient detail on controls. Identifying and addressing these pitfalls is crucial for the effective implementation of AI ethics.

Common Pitfall #1: Failing to Establish Comprehensive Measurement Standards

The nature of generative AI makes quantifiable measurement extremely difficult, especially for ethical concerns, because of the inherent complexity, subjectivity, and dynamic nature of AI. Traditional machine learning algorithms have standardized methods for measuring accuracy and precision. In contrast, generative AI outputs, such as text or images, are ever-changing and non-quantitative, making standard measurement challenging. Ensuring compliance with ethical and bias considerations adds another layer of complexity, often requiring human review.

Mitigation Tactic: Develop systematic measurement guidelines and metrics for decision-making to effectively address these challenges.
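One way to make human review quantifiable is to have reviewers label each generated output and then compare the share of flagged outputs across groups of prompts. The following is a minimal sketch under that assumption; the function names, the group labels, and the sample data are hypothetical, and a gap in flag rates is only one of many possible metrics.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flag_rates(reviews: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of outputs flagged by reviewers for each group.

    Each review is a (group, flagged) pair, where `flagged` is True when a
    human reviewer judged the generated output unacceptable.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in reviews:
        totals[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def max_rate_gap(rates: Dict[str, float]) -> float:
    """Return the largest difference in flag rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical review labels: (prompt group, flagged by reviewer).
sample_reviews = [
    ("group_a", True),
    ("group_a", False),
    ("group_b", False),
    ("group_b", False),
]
rates = flag_rates(sample_reviews)
gap = max_rate_gap(rates)
```

A gap near zero suggests reviewers flag outputs at similar rates across groups. A large gap is a signal to investigate, not proof of unfair treatment.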

Common Pitfall #2: Lacking Rigorous Evaluation Processes

Many frameworks lack testing or validation processes, resulting in companies facing issues with inappropriate outputs from AI systems. For example, in February 2024, Google was forced to apologize after users started seeing unexpected results when using its publicly released Gemini AI tool. Image prompts involving specific groups of people resulted in offensive outputs, leading Google to disable the ability to generate images of people entirely. For months afterward, any request to generate an image that involved people would be met with: "We are working to improve Gemini's ability to generate images of people. We expect this feature to return soon and will notify you in release updates when it does." This incident highlights the need for more rigorous evaluation processes. Institutions must advocate for and participate in creating comprehensive validation frameworks that include diverse and extensive testing scenarios.

Mitigation Tactic: Implement rigorous evaluation processes to prevent incidents and ensure ethical AI deployment.
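A rigorous evaluation process can be approximated in code as a pre-release gate: run a fixed suite of test prompts through the system, check each output against a policy, and block release when too many checks fail. The sketch below assumes a text-in, text-out system; `stub_model`, `no_blocked_terms`, and the blocked-term list are hypothetical stand-ins rather than any vendor's API, and a keyword check is far simpler than a real review.

```python
from typing import Callable, List, Tuple


def run_suite(
    generate: Callable[[str], str],
    prompts: List[str],
    is_acceptable: Callable[[str, str], bool],
) -> List[Tuple[str, str]]:
    """Run every prompt and collect (prompt, output) pairs that fail the policy."""
    failures = []
    for prompt in prompts:
        output = generate(prompt)
        if not is_acceptable(prompt, output):
            failures.append((prompt, output))
    return failures


def release_allowed(
    num_failures: int, num_prompts: int, max_failure_rate: float = 0.0
) -> bool:
    """Allow release only when the failure rate is within the tolerance."""
    if num_prompts == 0:
        return False  # an empty test suite provides no evidence
    return num_failures / num_prompts <= max_failure_rate


# Hypothetical stand-ins for a real model and a real policy check.
BLOCKED_TERMS = {"forbidden"}


def stub_model(prompt: str) -> str:
    return f"response to {prompt}"


def no_blocked_terms(prompt: str, output: str) -> bool:
    return not any(term in output.lower() for term in BLOCKED_TERMS)


prompts = ["describe a doctor", "say something forbidden"]
failures = run_suite(stub_model, prompts, no_blocked_terms)
can_release = release_allowed(len(failures), len(prompts))
```

Because the model and the policy are passed in as functions, the same suite can be rerun whenever either changes, and it can be extended with more diverse prompts over time.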

Common Pitfall #3: Neglecting Enforcement Mechanisms

Another significant issue in many frameworks is the absence of explicit enforcement or oversight mechanisms. Posting AI ethics guidelines is insufficient without incorporating those guidelines into development processes. An organization's framework must explain what enforcement mechanisms are in place and how they work. Many governmental AI ethics frameworks are aspirational and lack legal consequences. And while legislative and regulatory processes determine legal enforcement, organizations must plan for proper enforcement mechanisms in anticipation of actual codification. Academic institutions can also help by fostering a culture of accountability and collaboration with industry and government partners to ensure ethical guidelines are actionable.

Mitigation Tactic: Establish clear enforcement mechanisms and integrate them into the development processes.

Common Pitfall #4: Misunderstanding or Omitting Key Controls

Issues often arise around three categories of controls: privacy, bias, and legality. Either these controls are not present in current frameworks, or there is a misunderstanding of what they entail and how they need to be addressed.

- Privacy: Respecting individuals' rights recognized by laws or conventions and allowing control over their data. Privacy must be balanced with data access and usability, ensuring individuals' right to privacy is protected.

- Bias: Bias cannot be entirely removed from AI systems. Statistical bias is often confused with social bias, which leads to unfair treatment of an individual or group. Technical bias in AI refers to the system's tendency to reflect certain patterns or preferences, which is not inherently negative. However, unwanted bigotry or unfair bias must be addressed. Ethical AI must uphold moral practices while acknowledging that some bias is inherent and necessary. To address bias, it is crucial to have individuals who understand the differences and the necessity of certain types of bias.

- Legal Compliance: Ensuring algorithms and procedures comply with applicable laws and regulations in every jurisdiction is challenging due to the evolving regulatory landscape. Continuous monitoring and adherence to legal standards are essential to prevent the negative consequences of unrestrained AI.

Mitigation Tactic: Correctly understand and incorporate key controls to address privacy, bias, and legal compliance effectively.

Addressing these common pitfalls is essential for developing robust AI ethics frameworks. By establishing comprehensive measurement standards, implementing rigorous evaluation processes, ensuring effective enforcement mechanisms, and correctly understanding and incorporating key controls, organizations can create ethical AI systems that operate responsibly and effectively.

AI Ethics Framework Implementation: Start Your Journey

In this article, we defined what a framework is and examined its importance in mitigating the risks discussed in Part I. We explored challenges and common pitfalls in establishing an effective AI ethics framework, drawing insights from industry leaders and outlining key considerations for successful implementation.

To ensure ethics are central to AI implementation within your organization, it is essential to use a robust and actionable framework. Establishing an ethical AI framework requires concrete steps:

- Prioritize High-Impact Areas: Identify areas where ethical considerations are crucial.

- Translate Priorities into Measurable Goals: Define clear, actionable goals based on these priorities.

- Support with Key Performance Indicators (KPIs): Develop KPIs to effectively track progress and ensure accountability.
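The three steps above can be sketched as a small prioritization exercise: score each candidate area, keep the highest-scoring ones, and attach a measurable goal and KPI to each. The scoring rule (impact times likelihood on 1 to 5 scales), the area names, and the KPI wording are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Area:
    """A candidate area for ethical review; all fields are illustrative."""
    name: str
    impact: int       # assumed scale: 1 (low) to 5 (high)
    likelihood: int   # assumed scale: 1 (rare) to 5 (frequent)
    goal: str         # measurable goal derived from the priority
    kpi: str          # indicator used to track progress on the goal

    @property
    def score(self) -> int:
        """Simple risk score: impact multiplied by likelihood."""
        return self.impact * self.likelihood


def prioritize(areas: List[Area], top_n: int) -> List[Area]:
    """Return the top_n areas ordered from highest to lowest score."""
    return sorted(areas, key=lambda area: area.score, reverse=True)[:top_n]


# Hypothetical candidate areas.
areas = [
    Area("marketing copy", 2, 3,
         "no unreviewed claims published", "share of copy reviewed"),
    Area("hiring recommendations", 5, 4,
         "comparable shortlist rates across groups", "shortlist rate gap"),
    Area("customer chatbot", 4, 3,
         "no personal data in responses", "responses flagged per 1,000"),
]
top_areas = prioritize(areas, top_n=2)
```

Here `top_areas` contains the hiring and chatbot areas, each already paired with the goal and KPI that will be tracked.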

Part III of this series provides the A&MPLIFY roadmap, a comprehensive guide to help your business implement ethical AI practices. This roadmap will equip you with the tools and strategies needed to integrate ethics seamlessly into your AI initiatives, ensuring responsible and sustainable growth.

Originally published 27 August 2024
