LLMs and the prerogatives of explainability

Large language models (LLMs) are being developed by the world's leading technology firms, the most notable example being OpenAI's ChatGPT. LLMs are a type of artificial intelligence (AI) algorithm that uses deep learning techniques and large datasets to generate and predict new content.1 They have demonstrated a striking ability to generate conversations and coherent text across domains such as the humanities, geopolitics, literature, physics, and computer science. GPT-4 has even passed medical, law, and business school exams, and scored in the 99th percentile on the 2020 USA Biology Olympiad Semifinal Exam2.

The release and popularity of OpenAI's ChatGPT have jolted many of the world's leading technology firms into developing their own LLMs. Google's PaLM, Baidu's Ernie Bot, and Meta's LLaMA all compete with ChatGPT in the LLM space, resulting in an AI arms race. While many developers train LLMs on text, some have started to train models on video and audio input, which flattens learning curves and shortens development cycles. With more sophisticated training protocols, newer releases of LLMs carry the potential to markedly improve accuracy and enhance capabilities, helping researchers expedite technologies and bring them to market faster, as demonstrated by autonomous driving sensing regimes3 and revolutionary drug discoveries4.

However, the development of LLMs has given rise to a number of risks and uncertainties, including algorithmic bias, misuse, and mismatches between production data and training data, or "drift". One emerging point of concern has been reconciling an LLM's training parameters with its authenticity and trustworthiness, with the aim of minimizing "stochastic parrots" and hallucinations. In their seminal paper, "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?", Emily Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell describe a language model as a "system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot."5 A stochastic parrot can thus be understood as a model that repeats patterns from its training data without understanding its own outputs, while hallucinations occur when LLMs generate text based on their internal logic, producing context-based predictions that may sound correct but are factually inaccurate or contradictory. Therefore, understanding an LLM's explainability, such as ChatGPT-4's parameters and underlying logic, will prove immensely helpful for businesses testing the relative creativity of LLMs against human innovation and ingenuity, and will give AI implementers greater insight into the limits of training datasets.
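The "stochastic parrot" idea of stitching words together by probability alone can be illustrated with a deliberately tiny sketch. This is a toy bigram model, not how ChatGPT or any production LLM is built (those rely on large neural networks); the corpus string and the function names `build_bigram_table` and `parrot` are hypothetical and chosen only for illustration.

```python
import random
from collections import defaultdict


def build_bigram_table(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words observed right after it."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)


def parrot(table: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Emit up to `length` words by sampling observed followers.

    Followers that occurred more often are proportionally more likely to be
    picked, so the output tracks the corpus statistics with no notion of meaning.
    """
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        followers = table.get(output[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Hypothetical miniature "training data".
corpus = "the model predicts the next word and the next word follows the model"
table = build_bigram_table(corpus)
sample = parrot(table, start="the", length=8)
print(sample)
```

Every adjacent word pair in the output was seen in the corpus, yet the sentence as a whole need not mean anything, which is the behavior the quoted definition describes.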

One example of ChatGPT's outputs was put forward in a recent paper by Christian Terwiesch, a professor of Operations, Information and Decisions at the Wharton Mack Institute for Innovation Management of the University of Pennsylvania, a WTW/WRN partner institution. The paper demonstrates the relative advantage LLMs have over their human counterparts in idea generation.

Novelty and generation: An ideational study

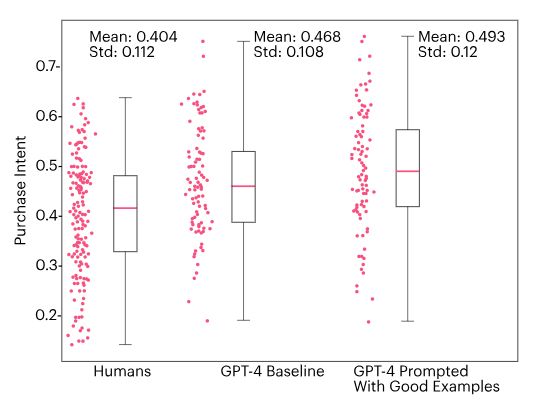

To test the ideational potential of LLMs vis-à-vis humans, Terwiesch et al6 compared three pools of ideas for new consumer products: a first pool created in 2021, before LLMs were available, by Wharton students enrolled in a course on product design; a second pool generated by ChatGPT-4 from the same prompt given to the students; and a third pool generated by ChatGPT-4 from the same task plus a sample of ideas to enable in-context learning. The researchers sought to address three questions: how productive is ChatGPT-4, what is the quality distribution of the ideas it generates, and how can LLMs be used effectively in practice, with what implications for innovation management?

As a benchmark, Terwiesch used two hundred randomly selected ideas from the pool generated by Wharton's 2021 class. ChatGPT-4 generated two hundred ideas in 15 minutes7: one hundred without being given examples of good ideas and another one hundred after being given such examples. These numbers contrast sharply with human performance, as humans have been shown to generate five ideas in 15 minutes, demonstrating the productive superiority of ChatGPT-4. To measure the quality of the ideas, the researchers used Amazon's MTurk platform to evaluate all four hundred ideas, with each idea evaluated twenty times by users asked to express purchasing intent. The average quality of ideas generated by ChatGPT-4 was rated more highly than that of ideas generated by humans, while human ideas fared slightly better on novelty scores.
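The arithmetic behind these figures can be sketched in a few lines. The inputs are the numbers quoted in this article; the hourly rates, the speed-up ratio, and the total rating count are derived here and are not reported in the study itself. The sketch assumes the ChatGPT-4 and human figures refer to comparable 15-minute sessions, and the constant and function names are illustrative.

```python
# Figures quoted in the article.
WINDOW_MINUTES = 15
LLM_IDEAS = 200          # ideas ChatGPT-4 generated in the window
HUMAN_IDEAS = 5          # ideas humans generated in the same window
TOTAL_IDEAS_RATED = 400  # 200 student ideas + 200 ChatGPT-4 ideas
RATINGS_PER_IDEA = 20    # MTurk evaluations per idea


def ideas_per_hour(ideas: int, minutes: int) -> float:
    """Convert a count over a time window into an hourly rate."""
    return ideas * 60 / minutes


llm_rate = ideas_per_hour(LLM_IDEAS, WINDOW_MINUTES)
human_rate = ideas_per_hour(HUMAN_IDEAS, WINDOW_MINUTES)
speedup = llm_rate / human_rate
total_ratings = TOTAL_IDEAS_RATED * RATINGS_PER_IDEA

print(f"ChatGPT-4: {llm_rate:.0f} ideas/hour, humans: {human_rate:.0f} ideas/hour")
print(f"Speed-up: {speedup:.0f}x, total ratings collected: {total_ratings}")
```

On these inputs the productivity gap is a factor of 40, and the evaluation step implies 8,000 individual purchase-intent ratings.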

Figure: Purchase intent by idea type. Source: LLM Working Paper, p. 7.

While the study had many limitations, it offers important lessons in innovation management and in augmenting the human/machine synergy that will come to define a more productive and dynamic workforce. Until recently, creative tasks were the exclusive domain of human beings. Apprehensions about AI have thus far centered on understanding the rationale behind its reasoning processes. Understanding the reasoning of such models serves two main purposes: first, knowing why models generate the ideas they produce; and second, gaining transparency into the underlying generators, which will lead to more sophisticated, qualitative, and expansive models (or, in the case of GPT-4, understanding why it may be getting "dumber" with more inputs involved)8. As iterations of LLMs continue to improve thanks to greater access to public and private data, data from third-party providers, AI feedback, and reinforcement learning, multiple independent studies have shown that LLMs have crossed a crucial threshold in solving complex scientific and mathematical equations.

Implications and lessons for corporations

Corporations will now be able to use LLMs for tasks as varied as cyber risk mitigation, HR practices, user support queries, organizational resilience and corporate strategy, workflow automation, and embedded software applications for streamlined business operations. While many initially feared the effect of AI on highly automatable, procedurally simple "low skill" jobs, recent LLMs have thrown that old paradigm into doubt, with white-collar jobs now also under threat. The advanced logical reasoning and creativity of LLMs will have profound implications for realistic, knowledge-intensive tasks.

However, in the short to medium term, current AI capabilities will create a "jagged technological frontier" where some tasks are done by AI while the rest are performed by humans9. A recent Harvard Business School (HBS) study, conducted in collaboration with Boston Consulting Group (BCG), found that the best strategy moving forward is a symbiotic human-machine collaboration in which humans upskill by learning and adopting AI platforms such as ChatGPT. In an experiment involving 8% of BCG's global workforce, researchers found that consultants who used ChatGPT-4 on twenty realistic tasks showed a 40% improvement in quality, completed 26% more tasks, and finished them 12.5% faster.10 Given these figures, businesses will be hard pressed not to adopt such practices, as competition will invariably force a race towards greater efficiency and productivity across industries and domains.

Corporations will soon have access to their own internal LLMs, much as intranets exist alongside the larger internet. Risk mitigation strategies for internal purposes will depend on engineers planning proper evaluations, selecting training datasets intentionally, engaging high-level stakeholders in the creation, upkeep, and maintenance of LLMs, and having an AI guidance framework in place to deal with exigent circumstances and worst-case scenarios.

Incorporating LLMs into bona fide use cases will result in more methodical assessments in enterprise risk, financial analysis and planning, and fraud detection, as well as enhanced innovation, increased worker productivity, and stronger idea generation. Retooling, upskilling, and adopting dynamic AI platforms such as LLMs will ensure that businesses, executives, and workers stay on the cutting edge of implementation practices, as AI will continue to disrupt how businesses engage with their customers and how society adapts to ever-present disruptive technologies.

Footnotes

1. Large Language Models (LLMs).

2. ChatGPT is on its way to becoming a virtual doctor, lawyer, and business analyst.

3. What are Large Language Models (LLM)?

4. Large language models may speed drug discovery.

5. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

6. LLM Ideas Working Paper.

7. Is ChatGPT a Better Entrepreneur Than Most?

8. Stanford scientists find that yes, ChatGPT is getting stupider. Some researchers have found that ChatGPT may be getting "dumber", with updates to its model hurting the chatbot's capabilities.

9. Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality.

10. Using ChatGPT in consulting SSRN.
