We have explained the technical aspects of a large language model in part 17 of our blog. But what conclusions can we draw from this in terms of data protection? Does an LLM contain personal data within the meaning of the GDPR and the Data Protection Act? A proper answer to this question must take into account the intrinsic nature of such AI models as well as the needs of the data subjects. This part 19 of our AI blog series will provide an answer.

Executive Summary:

Whether or not personal data is contained in a large language model (and whether such a model produces such data) must be assessed from the perspective of those who formulate the input and those who have access to the output. Only if it is "reasonably likely" that these persons will formulate an input that leads to the generation of texts containing personal data can it be assumed that a language model contains such data (provided, of course, that the persons with access to it can also reasonably identify the data subjects). Whether it is reasonably likely depends not only on the objective possibility that such input will be written, but also on the interest of these persons to do so. This follows from the so-called relative approach and the further case law on the GDPR and Swiss DPA, whereby the input in the case of a language model is one of the means that make it possible to identify the data subject to which the factual knowledge of a language model relates, given that this knowledge can only be accessed via output and every output necessarily requires an input to which the model can react. For one user, a language model will not contain or even produce any personal data; for another, the same model will do so. In addition, it should be noted that not all personal data generated by the model is based on the factual knowledge stored in the model. It can also be merely a random result because the model does not have more probable content available for the output. In such cases, the output may contain personal data, but it is not in the model. This applies even if the data in question has been "seen" by the model during training. This is because the model does not "memorize" most of this data as such, but only information seen more often. 
Although there are other ways in science to infer the content of a model, for example by analysing the "weights" it contains, these are irrelevant for answering the question of whether it contains personal data and which data, because according to the relative approach it only depends on how the respective user of the model uses it - and this user normally accesses it via a prompt (i.e. input). The different phases in the "life" and use of a large language model must therefore also be regarded as different processing activities for which there are different controllers. Those who have trained the model are not automatically responsible for data protection violations by its users – and vice versa.

At first glance, the answer to the question in the lead seems clear: it must be a clear "yes", especially as we all know that we can also use a language model to generate statements about natural persons – without the names of these persons being mentioned in the prompt. If you ask ChatGPT or Copilot who the Federal Data Protection Commissioner (i.e. the head of the Swiss data protection supervisory authority) is, you will find out that his name is Adrian Lobsiger – and you will learn a lot more, even that he was a public prosecutor and judge in the Swiss Canton of Appenzell Ausserrhoden (both of which are wrong, but we will discuss how to deal with such errors in another blog post).

This is why most data protection professionals and authorities take it for granted that language models contain personal data without asking any further questions. No distinction is made as to whether a language model has merely picked up the identifying elements in the output from the prompt ("Write me a love letter for Amelia Smith from Manchester, my first love from kindergarten"), whether the chatbot has the personal data from an Internet query or whether it really has been sourced from within the language model. No distinction is made as to whether they are completely random results or originate from factual "knowledge" contained in the language model.

The answer to this question is by no means trivial. As far as we can see, this question has also barely been discussed to date. Yet, there are now some representatives of EU supervisory authorities who, according to reports, even go so far as to claim that data protection law (specifically: the GDPR) does not apply to large language models at all, which implies the assumption that they do not contain any personal data (see here). Conversely, the blanket assumption that an LLM as such is always subject to data protection – including the right of access and the right to be forgotten – is not correct, either, because it does not take essential elements of such models into account. For example, it would mean that anyone who stores a model on their system (which many people do without even knowing) automatically becomes a controller with all the consequences. Once again, the truth (and reasonable solution) lies somewhere in the middle.

Delimitation of responsibility required

First of all, the question of whether a large language model is subject to data protection is basically the wrong one. Data protection obligations apply to persons, bodies or processing activities, but not objects. So, if we ask ourselves whether the EU General Data Protection Regulation (GDPR) or the Swiss Data Protection Act (DPA) applies to an LLM, we have to ask which party this question refers to. The answer may be different depending on whether it is answered from the perspective of the person who trains the LLM, the person who keeps it stored on their computer, the person who uses it indirectly by accessing a service such as ChatGPT or Copilot, or the person who makes it available as a service in the cloud, as hyperscalers do for their customers.

We have noticed that the distinction in responsibility between the creator of an LLM and the various kinds of its users, which was already put forward last year, is becoming increasingly established in practice. This also makes sense: the training of an LLM is a different processing activity in relation to personal data than the use of an LLM to generate output in which personal data can be found. If data protection has been violated when creating an LLM (e.g. by using unauthorised sources), a person who uses the LLM, and whose output does not contain personal data relating to that violation, cannot be held responsible for violations that occurred in an earlier and separate processing activity. Also, an LLM generally does not, as such, store the personal data it has been shown during the training (more on this below). If you want to know more about the technical aspects of an LLM, you should read our blog post no. 17.

This does not mean that there are no circumstances in which a downstream entity that uses such an illegally trained LLM can be held responsible for the upstream controller's breach of the law. There are cases where this could happen, but they presuppose, at least in terms of data protection, that the earlier breach is somehow continued in the form of personal data that is being processed by the downstream entity at issue. The reason is that data protection obligations are linked to personal data, and dependent on its existence. If such data no longer exists in this form, the responsibility under data protection law ends, as well. The doctrine of the "fruit of the poisonous tree" does not apply in data protection beyond the specific "poisoned" personal data.

Independent processing activities

For each processing activity in the life cycle of a language model, it must therefore be determined independently who is considered the controller and who is not, or who may be the processor. The consequence of this is that a person who trains an LLM in compliance with data protection law cannot, in principle, be held responsible merely because of such training for infringements that a separate controller later commits with it, for example because erroneous output is used without verifying its accuracy or because a model is used to generate unlawful output. The only link between the two that could result in liability of the former for the latter would be the act of disclosing the LLM to such subsequent controller.

A distinction must also be made between the different users in the same way. When companies A, B and C access the LLM stored at a hyperscaler D, there are three different processing activities that occur. Company A is not responsible for what B and C do with the same model, and D will generally not be responsible for these processing activities either, as it will usually only act as their processor. D is ultimately only responsible for the storage of the model on its servers – provided that the model contains data from the perspective of D, which is by no means clear.

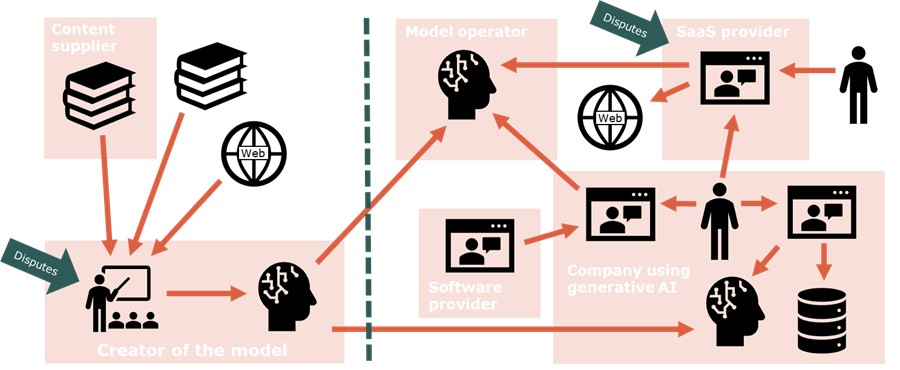

As the following diagram illustrates, there are several distinct areas of responsibility in practice: One is the creation of the language model, others are making it available in a cloud (as Microsoft, AWS, Google and OpenAI do, for example), offering AI services to the general public (such as ChatGPT, Copilot or DeepL), various forms of deployment within companies (with models used locally but also access to those in the cloud or via AI services) and the development of AI solutions:

In particular, companies that basically only use AI for their own purposes will be happy to hear that most of the legal disputes that language models have led to so far primarily concern those that train such models or offer them as SaaS services on the open market and are accessible to a wide audience. The demarcation between creation and use of an LLM in the sense of a legal "firewall" shielding both sides is becoming increasingly clear. This is good news insofar as this reduces the practical risks for the mere users of AI. Although we expect to see court rulings that find that the creation of an LLM has infringed upon third party rights and fines issued to some of the big names in the AI market for violations of data protection, we predict that these developments will not fundamentally alter the way these technologies are used and spread.

Definition – the relative approach

This brings us to the core question of this discussion: Does a large language model contain personal data? We will answer this question with regard to large language models that are based on the Transformer technique, as described in our blog post no. 17, which you should read to aid your understanding of the following considerations.

To answer the question, we have to dissect the definition of personal data as per the GDPR and the DPA. We have to be careful not to rely on the understanding of the term that has been propagated by many European data protection authorities in the past, as it is overbroad and not supported by current law: Neither is singularisation sufficient for identification (it is only one element of identification), nor is it sufficient that an identification can be made by any person and not only by those with access to the data (also known as the "absolute" or "objective" approach).

Rather, we rely on the interpretation of the ECJ for EU law, namely in "Breyer" (C-582/14) and most recently in "VIN" (C-319/22), and the Swiss Federal Court for the DPA, as in "Logistep" (BGE 136 II 508): Whether a piece of information is considered personal data can only be assessed from the perspective of those with access to the information at issue. If the data subjects to whom the information relates can be identified by these parties with access, then the information is personal data (for them), otherwise it is not. This is also known as the "relative" approach. Whether the controller itself can identify the data subjects is not essential; anyone who discloses information that is not personal data for them, but is for the recipients, is nevertheless disclosing personal data to a third party and must therefore comply with data protection with regard to such processing activity (this is also how the reference to third parties in para. 45 of the decision C-319/22 is to be understood).

The persons are only identifiable if the necessary means are "reasonably likely to be used", to cite Recital 26 of the GDPR. As a certain degree of probability is therefore required, a theoretical possibility of identification (as wrongly assumed by various EU data protection authorities in the "Google Analytics" decisions) is not sufficient. The Swiss Federal Court already established this years earlier for the DPA by defining two components (BGE 136 II 508, E. 3.2 ff.): It is not enough that identification is objectively possible with a certain amount of effort (objective component). The interest in making the effort required for identification also has to be considered and, thus, sufficient (subjective component).

The interest in identification

At first glance, this Swiss view does not appear to be entirely the same as laid out in the recent "VIN" decision of the ECJ. According to the latter, it is sufficient that the means for identification for the party with access to the data are "reasonably likely to be used" (C-319/22, para. 45, with the German version saying "reasonably ... could be used") and that such party can be regarded as someone "who reasonably has means enabling (identification)" (para. 46), but not whether the party actually uses these means. However, since an objective standard applies to the "reasonableness" test that has to be applied, the difference between the Swiss and the ECJ approach will be small to irrelevant in practice.

Even if the ECJ did not define what it means by "reasonably" in "VIN", it is clear from common language and expertise that the interest in identification will be decisive, as it is precisely this interest that determines the effort a party makes for this purpose, at least from an objective point of view (as required by the GDPR). Anyone who has no interest in identifying a data subject will – reasonably – not make any effort to do so, as there is no benefit to justify the effort. This does not rule out the possibility that an organisation could nevertheless obtain data to identify a data subject. However, if it takes effective measures against this happening, for example by deleting such identifying information or only processing it in such a way that it cannot be used for identification, this must be taken into account when considering which means of identification it "reasonably" has at its disposal. It makes a difference whether someone has information in a form that can be used for identification or whether someone has such information, but cannot practically use it for identification. To describe the situation with an example: If the information for identification is somewhere in a large, disorganised pile of documents, but the owner has no idea where, they will only search through the whole pile if their interest in the information is large, and only then do they "reasonably" have the means to identify the data subject. So even under the GDPR, we are in line with the formula of the Swiss Federal Court, according to which it all depends not only on the means available, but also on the interest in obtaining or using them.

The "motivated intruder test" of the UK supervisory authority ICO also relies on the interest in identification when answering whether data has been truly anonymized, i.e. no longer constitutes personal data for anyone: It asks whether a "motivated attacker" would be able to identify the individuals without prior knowledge using legal means and average skills. While the test presupposes an interest in obtaining and using these means, the extent to which such interest exists must be determined for each individual case. The criteria for doing this include, among other things, the attractiveness of the data. And where people with additional knowledge or special skills typically have no interest in using these means, this can also be taken into account according to the ICO.

The definition of personal data is therefore basically the same under the GDPR and the DPA. As a result, the question of whether certain information constitutes personal data cannot be answered in absolute and general terms, but only in relation to those parties that have access to it. In addition, it must be assessed whether they have relevant means of identification available to them. This in turn depends on their interest in identification, which determines the effort they will objectively make to obtain and use these means.

Application to large language models

These findings on the concept of personal data can be applied to large language models and provide corresponding answers.

As a first step, it should not be contentious that anyone looking inside a large language model will not see any personal data. Such a model contains a dictionary of tokens (i.e. words or parts of words) and first and foremost many numbers. So, if you get your hands on a file with an LLM and don't know how to make it work and have no interest in doing so, you have no personal data (which doesn't mean that you are out of scope of data protection law if you were to give the file to someone who has this knowledge and interest).

Although there are ways of determining the "knowledge" content of an LLM other than simply using it (see, for example, the research results from Anthropic here), this is not yet relevant to the practical use of an LLM, which is why we will not look any deeper into this here. Anyone who does not use such methods to look inside a language model when using an LLM does not have to be judged on the basis of the findings that would be possible with them when it comes to the question of whether their application is subject to data protection.

According to the relative approach, whether a large language model contains personal data must be assessed solely from the perspective of the user and those who have access to the output. This is because only they create the input and can identify the data subjects in the output generated from it. Therefore, only the way in which they use the language model in question can be relevant. This will typically be via a prompt, i.e. the formulation of the input.

If they cannot reasonably be expected to provide input that generates output relating to specific data subjects, or if those with access to the output do not reasonably have the means to identify those data subjects, then the use of the language model in their hands will not result in personal data and the data protection requirements will not apply in this case. Whether the model would be able to produce personal data under other circumstances is irrelevant in such a case.

However, even if personal data is to be expected in the output (and therefore the handling of the output is subject to data protection), this does not say anything about whether such data is also contained in the model itself. This aspect needs further consideration.

Factual knowledge through associations seen in the training material

To begin with, a machine does not have to contain personal data in order to generate it. A computer programme that randomly invents combinations of first and last names and outputs a text with an invented date as a birthday corresponding to these names generates personal data, at least if the recipients assume that the information actually relates to a natural person. However, if the recipients know that the person does not exist, there is no data subject – and without a data subject, there is no personal data.
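The random generator described above can be sketched in a few lines. This is only an illustration: the name lists, the date range and the function name `invent_person` are assumptions made for this example. The point it shows is that the program stores only non-personal building blocks, while each sentence it outputs is assembled anew.

```python
import random
from datetime import date, timedelta

# Hypothetical building blocks: none of these lists describes a real person.
FIRST_NAMES = ["Anna", "Luca", "Mia", "Noah"]
LAST_NAMES = ["Meier", "Keller", "Weber", "Huber"]


def invent_person(rng: random.Random) -> str:
    """Combine a random first name, last name and invented birthday."""
    first = rng.choice(FIRST_NAMES)
    last = rng.choice(LAST_NAMES)
    # Pick an arbitrary day within roughly 50 years after 1 January 1950.
    birthday = date(1950, 1, 1) + timedelta(days=rng.randrange(365 * 50))
    return f"{first} {last} was born on {birthday.isoformat()}."


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
sentence = invent_person(rng)
print(sentence)
```

Nothing in the program's data is a finished statement about a person. Whether the printed sentence is personal data depends on how its recipients understand it.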

The situation is similar with language models, except that they are not programmed by humans and are much more complex. The output that an LLM generates is also not part of the model itself, but only the result of its use – just as the random generator just mentioned does not contain the finished texts that it outputs, but creates them anew each time from a stock of non-personalised building blocks, based on an understanding of how language works and which terms fit together and how. An LLM by definition provides knowledge about language, not about general facts. Nevertheless, knowledge about how sentences are created and how words and sentences on certain topics typically sound or how they fit together inevitably also includes associated factual knowledge. It is more or less diffusely contained in the LLM as associations and affinities. If it is triggered with the right input, it can manifest itself in the output if the probabilities are right.

However, we must be aware that an LLM is not a database in which facts are stored that can be easily retrieved as long as the correct query command is given. An LLM is basically a text generation machine that attempts to "autocomplete" a certain input and uses empirical values from training to do so. If you ask a common LLM when Donald Trump was born, the answer will be "On 14 June 1946". If you ask who was born on 14 June 1946, the answer will be "Donald Trump". On the other hand, if you ask who was born in June 1946, you will sometimes get Trump as the answer, sometimes other people who were not born in June 1946 (but had their birthday then), sometimes others who were actually born in June 1946, depending on the model. The models have seen the date 14 June 1946, the word "birthday" or "born" and the name Donald Trump associated with each other very frequently during training and therefore assume that this pairing of words in a text is most likely to match, i.e. they have a certain affinity with each other and therefore form the most likely response to the prompt. The models do not "know" more than this. In particular, they do not contain a table with the birthdays of celebrities that can be looked up for the response. Only the right combination of input and the numbers in the model (as well as certain other parameters) produces the output. Each of these elements on their own will not produce anything close.

A language model contains personal data ...

The practical difficulty for the user of an LLM (and thus also for data protection) is that they generally do not know which parts of the output represent factual knowledge and which parts are invented, because an LLM itself also does not distinguish between fact and fiction, but only between more or less probable reactions to the respective input.

Whether the output generated by a system has very low probabilities (and is thus likely a "hallucination"), whether a part of the output it generates has been an obvious choice for the LLM due to the training material (and therefore a "good" output is generated from its point of view), but it is factually incorrect (because the training material was already incorrect), or whether the system recognises that it cannot give a reliable answer and therefore does not generate a factual answer, depends on its initial training and its other "programming" (such as the so-called alignment or the system prompt).

Today, there are ways of visualising the associations and affinities that are preserved in an LLM (see the research work by Anthropic cited above - they refer to "features" of a model). However, an LLM cannot know whether its answer is factually correct because it does not "think" in such categories. The fact that it nevertheless often formulates its answer as if it were proclaiming established facts is one of the main causes of the problems we have today with the use of language models. We are very easily deceived by self-confident formulations that sound as if they come from a human being.

However, even if language models are not technically designed to store factual knowledge about individual, identifiable natural persons and therefore personal data, this can still occur, as shown in the example of Donald Trump. Even the association between Trump, his birthday and 14 June 1946 will not be a 100% probability in the respective models, but ultimately this is not necessary for it to be the most probable choice for the output. It is clear to everyone that this information, and therefore personal data, is somehow "inside" the models in question. For this reason, the statement that the large language models do not contain any personal data is not correct in this generalised form.

... but not any data it saw during training

Conversely, it is also incorrect to assume that large language models contain all the personal data that they have "seen" during training. In terms of quantity alone, this is not possible: GPT-3, for example, requires 350 gigabytes of storage space. However, it was fed around 45 terabytes of data during training. AI researchers like to describe the training of language models as "compressing" the training content so that it fits into the model. However, from a data protection perspective, this can mislead people into assuming that the original information content, and therefore the personal reference, is retained. This is normally not the case; in the case of GPT-3, a compression by a factor of around 128 took place from a purely mathematical point of view, whereby the focus was on the preservation of linguistic knowledge, not factual knowledge.
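The compression factor mentioned above follows from simple arithmetic on the two figures given in the text. The short calculation below assumes decimal units (1 terabyte = 1000 gigabytes); with binary units the result would be slightly higher.

```python
# Figures taken from the text above.
TRAINING_DATA_TB = 45   # approximate size of the training data
MODEL_SIZE_GB = 350     # approximate storage size of the model

# Assumption for this sketch: decimal units, 1 TB = 1000 GB.
GB_PER_TB = 1000

ratio = TRAINING_DATA_TB * GB_PER_TB / MODEL_SIZE_GB
print(f"Training data is about {ratio:.1f} times larger than the model")
```

The ratio says nothing about which information survives training. It only shows that the model cannot hold a full copy of what it has seen.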

Usually, only information (e.g. references between words that have a common meaning) that occurs frequently in the same way, or is specific enough, becomes a fixed association or affinity and "survives" this compression process. In other words: The compression is very, very "lossy", i.e. most of the details are lost. However, even science does not know exactly how much a language model remembers. Even if a piece of information survives this training process, it is not clear in what form and it is also not clear whether the content is correct, be it because of errors in the training material or because the association or affinity became incorrect due to other circumstances. Instead of distinguishing between fact and fiction, the model provides for more or less strong associations and affinities between the individual elements of language, which results in a probability distribution when the model is asked to calculate the next word for a sentence that has been started.

The above makes it clear why, from a data protection point of view, it is not really decisive for the assessment of a language model whether certain personal data has occurred in the training material. If at all, relevance would only be given if it can be shown that the information in question has survived in the model as such as a relevant association and affinity, which depends above all on the frequency of its occurrence. Conversely, it cannot be concluded from the fact that certain personal data did not occur in the training material that the training process did not create any person-related associations or affinities in the model due to any coincidence, i.e. assuming, for example, that there is a clear connection between a name, birthday and a certain date. If a person is always mentioned together with another person in public texts, this can lead to a reciprocal association of attributes of the two persons. The profession, title or place of residence of one person is attributed to the other and vice versa.

The literature repeatedly refers to studies and methods (see for LLM e.g. here and the related blog post here) that make it possible to determine whether certain information - including personal data - has been used to train a model (usually referred to as "membership inference attacks"). It is emphasised that these attack methods pose a risk to data protection because they can be used to extract training content. This overlooks the fact that in the models in question, the training content can be found in the output even without an "attack" if the input is suitable, because the model has "seen" it sufficiently often during training; it is the phenomenon of "memorization", i.e. the model remembers a particular content seen during the training, such as Donald Trump's date of birth. In terms of data protection law, corresponding personal data is therefore contained in the model anyway if corresponding inputs are to be expected. The issue here is therefore the confidentiality of the training content and not the data protection compliance of a model in general. If the training content is not confidential (or does not have to remain anonymous), the possibility of such attacks is irrelevant. We will explain what this means for the training or fine-tuning of models in a separate blog post.

A model does not simply contain what it generates

Following these explanations, we now want to answer the question of whether a large language model itself also contains all the personal data it generates in the output.

The answer is "not necessarily". Take the birthday of another public figure, namely the data protection activist Max Schrems. His organisation has filed a data protection complaint against OpenAI because its "ChatGPT" service incorrectly displayed the date of birth of a prominent person (Max Schrems?). Indeed: If OpenAI's model "GPT-4o" is given the input "When is Max Schrems' birthday?", it states 9 October 1987 at a temperature of 0.0, 14 October 1987 at 0.5 and 3 October 1987 at 1.0 (all tests done in German).

These outputs show that the model seems to "know" the year and month of birth of "Max Schrems" (we leave it open here whether this is correct), but it has to "guess" the day. The variations with regard to the particular day indicate that the model does not have an association of "Max Schrems" with a particular day that is significantly stronger than the association with other days. The temperature has an effect as a parameter on the probability distribution of the possible answers (here concerning the days in October 1987), in particular in cases where the probabilities are rather similar, i.e. if no number is particularly probable. In practice, such "guessed" information can also be identified by slightly varying the input. For example, the question "What is Max Schrems' date of birth?" in GPT-4o (in German) results in 11 October 1987, i.e. yet another date, with the temperature remaining the same.
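How temperature interacts with a "peaked" versus a "flat" distribution can be illustrated with a toy softmax. The scores below are invented for illustration and are not taken from any real model, and the function name `softmax_with_temperature` is an assumption of this sketch. Treating a temperature of 0 as always choosing the highest score is a common convention.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        # Convention assumed here: temperature 0 means "always take the top score".
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Invented scores for four candidate answers.
peaked = [8.0, 1.0, 1.0, 1.0]   # one strong association
flat = [1.2, 1.1, 1.0, 1.0]     # no clearly preferred candidate

for t in (0.0, 0.5, 1.0):
    p_peaked = softmax_with_temperature(peaked, t)
    p_flat = softmax_with_temperature(flat, t)
    print(t, round(max(p_peaked), 3), round(max(p_flat), 3))
```

With the peaked scores, the top candidate keeps almost all of the probability at every temperature. With the flat scores, any temperature above 0 leaves the candidates close together, so sampling will frequently pick different ones.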

If the model lacks such a clear association, we cannot conclude that the information in question is contained in the model. It appears that it is rather not contained in it, just as the random birthday and name generator described above does not contain the personal data it generates. The fact that the model nevertheless produces a statement as if it knew what it is talking about is not the result of knowledge, but of how a language model is designed to act (we will discuss separately what this means from a data protection point of view). So while the building blocks for generating a date in the model for "Donald Trump" in response to the question about his birthday are obviously so close together within the model that they occur regularly in the output, there is no such building block for the day near "Max Schrems", which is why the model has to extend its search to the wider environment of "Max Schrems" and his birthday in order to find a number when generating an output, figuratively speaking.
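One simple way to make the difference between a clear association and a "guess" visible is to collect the answers from several runs and measure how often they agree. The sketch below uses the four dates reported above for Max Schrems. The list for Donald Trump is an assumption for illustration only, namely that every run returns the date mentioned earlier. The helper name `agreement` is likewise hypothetical.

```python
from collections import Counter


def agreement(answers):
    """Return the most frequent answer and the share of runs that gave it."""
    counts = Counter(answers)
    top_answer, top_count = counts.most_common(1)[0]
    return top_answer, top_count / len(answers)


# Dates reported in the text for different temperatures and prompt variants.
schrems_answers = [
    "9 October 1987",
    "14 October 1987",
    "3 October 1987",
    "11 October 1987",
]
# Assumption for illustration: four runs that all return the same date.
trump_answers = ["14 June 1946"] * 4

# Compare the full dates, then only the month and year.
full_date = agreement(schrems_answers)
month_year = agreement([a.split(" ", 1)[1] for a in schrems_answers])
stable = agreement(trump_answers)

print(full_date, month_year, stable)
```

In this sketch, the month and year agree across all runs while the full date does not, which mirrors the observation that the day appears to be guessed. A real check would need many more runs and prompt variants than four.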

It highlights a weakness of many current applications of language models: they are designed to always provide an answer, even if they have to provide answers that are not very probable. In such cases, they fill the gaps in their "knowledge" to a certain extent by inventing the corresponding information. Although the information is not completely plucked out of the air, it does not have a reliable basis. Depending on the application, such behaviour may be desirable or at least unproblematic, but it may pose a problem where facts are expected or assumed to exist.

One possible solution to this problem is the use of so-called confidence thresholds, i.e. the systems are programmed in such a way that they either produce an answer only if they are fairly certain of it or indicate how certain they are of the individual statements. In the case of deterministic AI (i.e. systems that specialise in recognising or classifying certain things), such values are commonly used. In the field of generative AI, however, this is not yet very common. In our view, it should be used more often. For example, a chatbot can be programmed so that it only provides an answer if it is relatively certain of it. It is, however, not clear how high the probability must be for something to be considered (supposed) fact instead of (supposed) fiction.
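
A confidence threshold of this kind could, in a very simplified form, look like the following sketch. The function name, the candidate probabilities and the threshold of 0.8 are all assumptions made for illustration; as noted above, it is not settled where such a threshold should lie, and a real system would have to obtain the probabilities from the model itself.

```python
def answer_with_threshold(candidates, threshold=0.8):
    """Return the most probable answer only if it meets the threshold.

    `candidates` maps possible answers to probabilities (hypothetical values).
    Returns None when no answer is probable enough, so the caller can
    decline to answer or flag the uncertainty instead of guessing.
    """
    best_answer, best_probability = max(
        candidates.items(), key=lambda item: item[1]
    )
    if best_probability >= threshold:
        return best_answer
    return None

# Invented distributions: one with a clear favourite, one without.
confident = {"answer A": 0.95, "answer B": 0.05}
uncertain = {"answer A": 0.30, "answer B": 0.25, "answer C": 0.45}

print(answer_with_threshold(confident))
print(answer_with_threshold(uncertain))
```

Returning nothing rather than the least unlikely candidate is the design choice that matters here: the application, not the model, decides what happens when confidence is low.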

Moreover, this probability only relates to the training material: If Max Schrems' date of birth from our example appears incorrectly in the training data with sufficient frequency, the LLM will produce it with a high degree of certainty and still be wrong. Of course, this does not change the data protection qualification of such information as personal data; incorrect information can be personal data, too (we will discuss whether such information is actually considered incorrect under data protection law in another blog post).

There is a further complication: If the model is not asked for the birthday of "Max Schrems", but "Maximilian Schrems", GPT-4o (in the German version) will with a relatively high probability output 11 October 1987. With this long form name, the association with the "11" is much stronger than with its short form, and it can be concluded that this date is indeed contained in the model. This may be because the training content with Mr Schrems' long name contained the date or otherwise made a special reference to the number "11" in the context of "birthday". The model cannot easily distinguish between these things. For the model, the names "Max Schrems" and "Maximilian Schrems" are strongly associated with each other, but with regard to the date of birth they lead to different results. It will therefore be important to work with such differences in both the right of access and the right of rectification.

Finally, there is one more point to consider: Of course, not every part of the output that a model generates with a high probability in connection with the mention of a specific person can be considered personal data stored in the model about this person. The high probability or confidence must relate to information triggered by the occurrence of the person concerned (i.e. by their identifiers such as name, telephone number or e-mail address). Only then is the high probability an indication that factual information and a specific natural person (or his or her identifiers) are sufficiently close to or associated with each other to be considered personal data in the model. If a model has the task of generating a children's story in which Donald Trump plays the main role, only a limited part of the information in the output will be associated with him within the model, even if the model produces the output with a high degree of confidence and even if the entire story appears to be Trump's personal data. In order to determine such a personal association within the model, the model will need to be "triggered" in a specific manner to eliminate the impact of other elements in the output as much as possible. We will address this topic in more detail in our blog post on the right of access in connection with LLMs.

The relative approach to the use of language models

The analysis of whether a language model contains personal data is not yet complete with the above. It must also take into account how likely it is that there will be any input at all that is capable of retrieving personal data from the model. This results from the relative approach and the fact that the input is one of the "means" that is necessary to identify any personal data of a person contained in the model. If there is a vault full of personal data, but no one with access to the vault has the key, there is no personal data for these entities, and they have no data protection obligations when handling the vault (apart from possibly the obligation not to make it accessible to any unauthorized person with a key). In a large language model, the user's input assumes the function of this key, as shown. Consequently, no personal data is available in relation to the language model as long as no input can reasonably be expected that is capable of extracting the personal data contained in the model in the form of output.

At this point, we can make use of our above finding that, in order to answer the question of whether such input is "reasonably likely" (and also whether the data subject can be identified), it is not only the objective possibility of the users of the model that counts, but also their subjective interest in formulating such input. This makes a big difference in practice: Objectively speaking, every user of a large language model can in principle create any input - all they have to do is type it in. In practice, however, they will only do so if they have sufficient interest in doing so.

Therefore, anyone who uses a language model in a controlled environment with prompts which, according to general judgement, are unlikely to lead to the generation of personal data because the user has no interest in such prompts (e.g. because they are not suitable for the use case) does not have to worry about data protection with regard to either the language model or the output. This would not change even if, by chance, an input nevertheless led to an output that could be regarded as personal data because, firstly, the user of the model would not readily recognise it as such (as in the case of the random birthday generator) and, secondly, only means of identification (including the prompt in this case) that are reasonably likely to lead to personal data are relevant. This presupposes a certain degree of predictability, which is not the case here.

The situation will be similar for a company that uses language models to generate content with personal data where this data does not originate from the model itself, but from the input, be it from the user's prompt or from other data sources consulted as part of an application with Retrieval Augmented Generation (RAG). In these cases, the language model is only used to process language and it is not the purpose of its use to supplement the output with factual knowledge or personal data from the model.

Conversely, a provider such as OpenAI, which makes its LLM available to any number of people, must also expect a correspondingly broad variety of prompts and therefore assume that a correspondingly broad range of personal data will be generated by the model. It is as yet unclear whether the provider must expect any number of prompts or only certain prompts. Taking into account that the means of identifying a person must be "reasonably likely" to be used, this should not include every single prompt, even in the case of widely used chatbots such as "ChatGPT" or "Copilot". A provider of such a chatbot will have to assume that its users will ask the chatbot about public figures. However, if we take the "reasonably likely" standard seriously, it will not have to assume that users will use the chatbot to query factual knowledge about any other person. Consequently, it could take the position that its models do not contain any personal data about these other people - until it is shown that such prompts do occur, of course. For such providers, the above findings therefore only provide limited relief.

Max Schrems would be such a public figure. So, if he complains that his date of birth is incorrectly included in ChatGPT, the model would indeed have to be checked for this accusation. As shown for GPT-4o, this would presumably reveal that the date of birth as such is not actually included in the model because there is no clear association in the model, which is why it invents a day of the month when asked about his birthdate. It appears that factual knowledge only exists with regard to the month and year of birth.

As mentioned, the output that the chatbot would generate in response to the question about Max Schrems' date of birth must be separated from this. According to the above, it will in any event be personal data because such a question is to be expected with a public chatbot such as "ChatGPT" and "Copilot", even if the operator of the chatbot would not ask it himself. It is sufficient that its users do so and that its system discloses the corresponding data to them. We will discuss in a separate blog post whether this is actually to be regarded as incorrect under data protection law if Mr Schrems' date of birth is incorrect.

To sum up: It is possible that an output contains personal data, but the underlying language model does not.

Conclusions for large language models

In order to determine whether and which personal data a language model contains pursuant to the GDPR and Swiss DPA, in summary, the following steps must be considered:

- In the specific case, who formulates the input for the large language model used and who has access to its output?

- Is it "reasonably likely" that (i) these persons will use the language model with inputs that cause the model to generate outputs with personal data that do not originate from the input itself, and (ii) those with access to such outputs will be able to identify the data subjects concerned? When determining whether it is reasonably likely, the interests of these persons in creating such input and identifying the data subjects have to be considered, not only the objective ability to do so.

- To the extent that the model generates such outputs with personal data about a particular data subject, do these vary as the input about that individual and the temperature are varied, or do they always remain the same in substance? In the latter case, this indicates that they are actually included in the model with a strong association to the data subject and that the data in question are not merely "gap fillers" due to a lack of better alternatives. In those cases, they are presumably contained in the model as personal data, and are not only included in the output by sheer coincidence or for reasons unrelated to the data subject.
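
The third step can be approximated in practice by collecting the answers a model gives across several prompt variants and temperatures and checking how often the same answer recurs. The following is only a sketch under assumptions: the answers are placeholder strings invented for illustration, the function name is our own, and what share counts as "the same in substance" is a judgement call that the code does not settle.

```python
from collections import Counter

def dominant_share(outputs):
    """Return the most frequent answer and the share of outputs it accounts for."""
    if not outputs:
        raise ValueError("at least one output is required")
    counts = Counter(outputs)
    answer, count = counts.most_common(1)[0]
    return answer, count / len(outputs)

# Placeholder answers gathered over varied prompts and temperatures (invented).
stable_answers = ["X"] * 9 + ["Y"]                 # almost always the same answer
varying_answers = ["A", "B", "C", "A", "D", "E"]   # no answer clearly prevails

for label, outputs in [("stable", stable_answers), ("varying", varying_answers)]:
    answer, share = dominant_share(outputs)
    print(f"{label}: most frequent answer {answer!r}, share {share:.2f}")
```

A high share would point towards a strong association within the model, while a low share would suggest that the answers are mere "gap fillers".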

Since the probabilities in steps 2 and 3 apply cumulatively, the overall probability of the presence of personal data of certain individuals in the model itself is normally correspondingly low. It results from the multiplication of the two individual probabilities. The above test steps can be used, for example, for a data protection risk assessment if a company is planning to use a language model and wants to demonstrate that any personal data that may be contained in a model does not pose a risk to the company because it will not be retrieved.
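
The multiplication can be shown with a short worked example. The two input values are purely hypothetical and chosen for illustration; in a real assessment they would be estimates, not measurements. The sketch also assumes that the probability for step 3 is assessed for the case that a relevant input actually occurs.

```python
def combined_probability(p_relevant_input, p_contained_in_model):
    """Multiply the step 2 and step 3 probabilities into an overall probability."""
    for value in (p_relevant_input, p_contained_in_model):
        if not 0.0 <= value <= 1.0:
            raise ValueError("probabilities must lie between 0 and 1")
    return p_relevant_input * p_contained_in_model

# Hypothetical estimates for a company's risk assessment (assumed values).
p_step_2 = 0.10  # a relevant, identifying input is reasonably likely
p_step_3 = 0.30  # the information is actually contained in the model

overall = combined_probability(p_step_2, p_step_3)
print(f"overall probability: {overall:.2f}")
```

Because both factors are at most 1, the product can never exceed the smaller of the two, which is why the overall probability is normally low.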

Conversely, in order to assert data protection claims against a language model for personal data contained therein, a data subject or supervisory authority will have to show that in the particular case the intended audience of the language model (step 1) is reasonably likely to use an input which, from the user's point of view, generates personal data about them in the output (step 2) and that the information in dispute is not invented, but contained in the model in a manner related to the person (step 3).

In our next blog posts, we will explain the impact this has on the question of how data subjects can access the personal data processed about them, how this relates to the accuracy of an output and how the right of rectification can be implemented.

PS. Many thanks to Imanol Schlag from the ETH AI Center for reviewing the technical aspects of the article.
